Today's webpage development cycle consists of constant iterations that aim to improve user retention, time spent on site, and overall quality of experience. Big companies like Google, Facebook, and Amazon invest a lot of time and money in online testing. The prohibitive costs of these approaches are an entry barrier for smaller players. Further, without a substantial user base it can be difficult to reach statistical significance within a reasonable duration. In this paper we propose Kaleidoscope, an automated tool to evaluate Web features at large scale, quickly, accurately, and at a reasonable price. Kaleidoscope can test two crucial user-perceived Web features: style and page loading. As far as we know, it is the first testing tool to replay page loading by controlling visual changes on a webpage. Kaleidoscope can concurrently load a webpage in two versions (e.g., different fonts, with vs. without ads) that are shown to a participant side by side.
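To make the point about statistical significance concrete, here is a minimal sketch of how one might check whether a side-by-side comparison shows a real preference. It assumes each participant casts a single binary vote for version A or version B and applies a two-sided exact sign test. The function name and the vote counts are hypothetical, and the text does not say that Kaleidoscope analyzes its results this way.

```python
from math import comb


def sign_test_p_value(prefer_a: int, prefer_b: int) -> float:
    """Two-sided exact binomial (sign) test against 'no preference' (p = 0.5).

    Hypothetical helper: assumes one binary vote per participant,
    with ties already discarded.
    """
    n = prefer_a + prefer_b
    if n == 0:
        return 1.0  # no votes, no evidence of a preference
    k = max(prefer_a, prefer_b)
    # Upper-tail probability P(X >= k) for X ~ Binomial(n, 0.5)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # Double the tail for a two-sided test and cap at 1.0
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    # Hypothetical vote counts, for illustration only
    for votes_a, votes_b in [(12, 8), (60, 40), (600, 400)]:
        p = sign_test_p_value(votes_a, votes_b)
        print(f"A={votes_a} B={votes_b} p-value={p:.4g}")
```

With the same 60/40 split, the p-value shrinks as the number of participants grows, which is why a small user base can make it hard to reach significance quickly.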

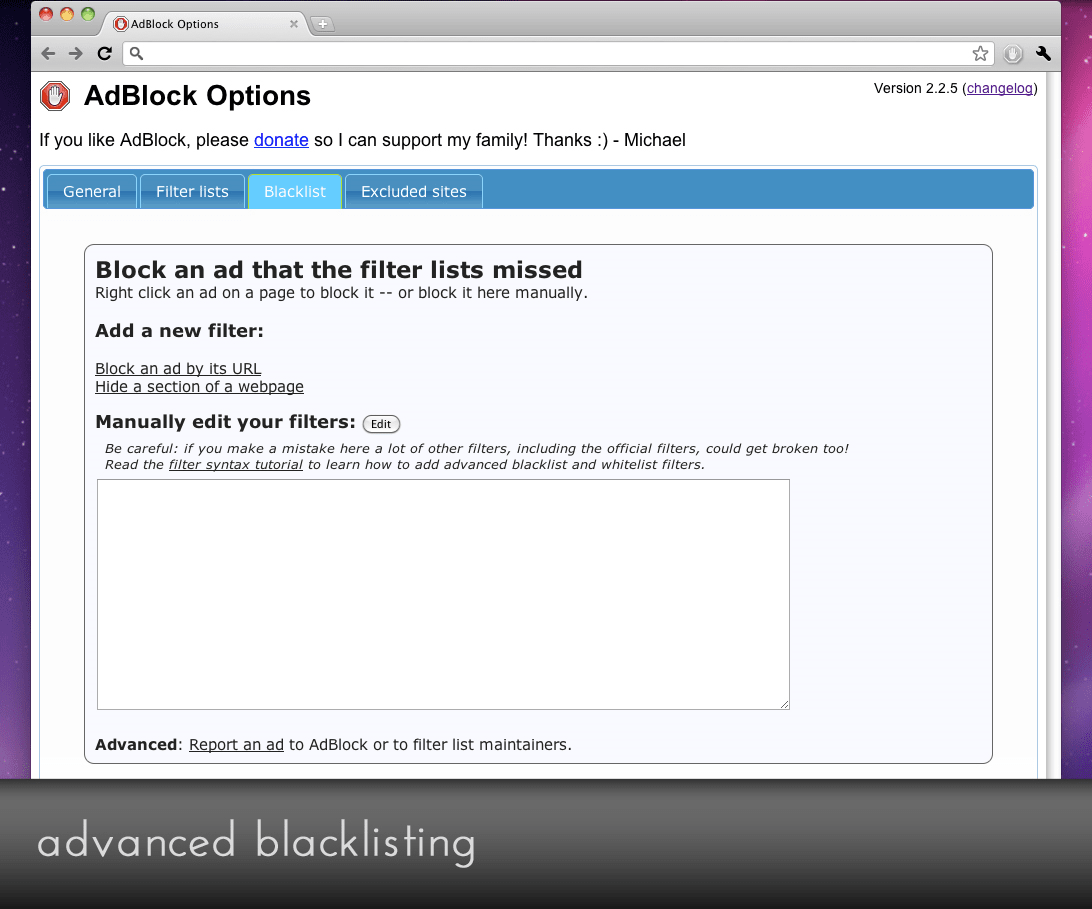

The current Internet content delivery model assumes a strict mapping between a resource and its descriptor, e.g., a JPEG file and its URL. Content Distribution Networks (CDNs) extend it by replicating the same resources across multiple locations and introducing multiple descriptors. The goal of this work is to build Web-LEGO, an opt-in service that speeds up webpages at the client side. Our rationale is to replace the slow original content with fast similar or equal content. Further, we perform a reality check of this idea in terms of the prevalence of CDN-less websites, the availability of similar content, and user perception of similar webpages, via automated tests at the scale of millions and thousands of real users. Then, we devise Web-LEGO and address natural concerns about content inconsistency and copyright infringement. The final evaluation shows that Web-LEGO brings significant improvements in terms of both reduced Page Load Time (PLT) and user-perceived PLT. Specifically, CDN-less websites provide more room for speedup than CDN-hosted ones, i.e., 7x more in the median case. Besides, Web-LEGO achieves high visual accuracy (94.2%) and high scores from a paid survey: 92% of the feedback collected from 1,000 people confirms Web-LEGO's accuracy as well as positive interest in the service.

In this paper we present Eyeorg, a platform for crowdsourcing web quality of experience measurements. Eyeorg overcomes the scaling and automation challenges of recruiting users and collecting consistent user-perceived quality measurements. We validate Eyeorg's capabilities via a set of 100 trusted participants. Next, we showcase its functionalities via three measurement campaigns, each involving 1,000 paid participants, to 1) study the quality of several PLT metrics, 2) compare HTTP/1.1 and HTTP/2 performance, and 3) assess the impact of online advertisements and ad blockers on user experience. We find that commonly used, and even novel and sophisticated, PLT metrics fail to represent actual human perception of PLT, that the performance gains from HTTP/2 are imperceptible in some circumstances, and that not all ad blockers are created equal.


Tremendous effort has gone into the ongoing battle to make webpages load faster. This effort has culminated in new protocols (QUIC, SPDY, and HTTP/2) as well as novel content delivery mechanisms. In addition, companies like Google and SpeedCurve have investigated how to measure "page load time" (PLT) in a way that captures human perception.
